For my senior thesis, I decided to combine my passions for both Computer Science and Music by developing an image analysis application that composes algorithmic music pieces.

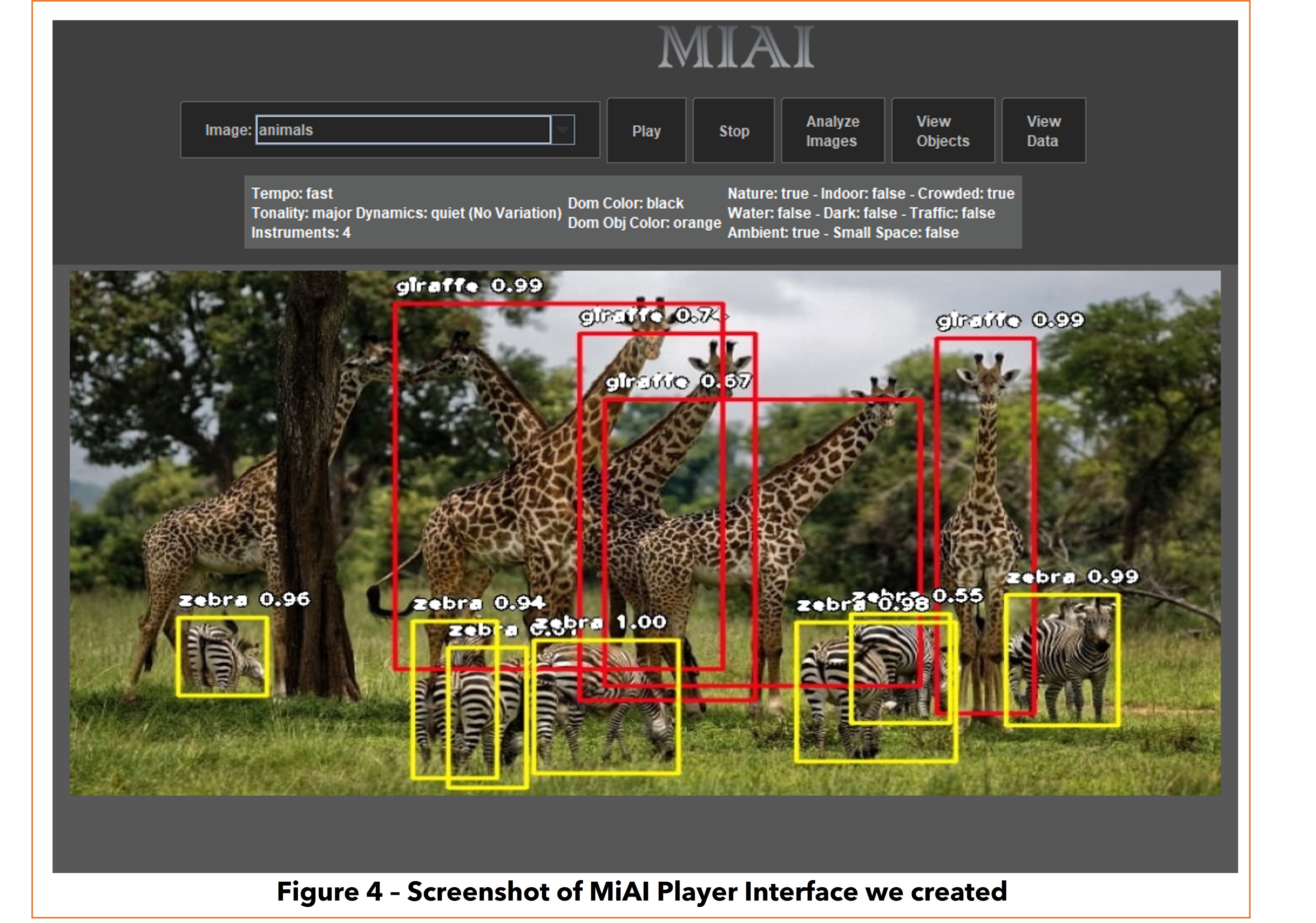

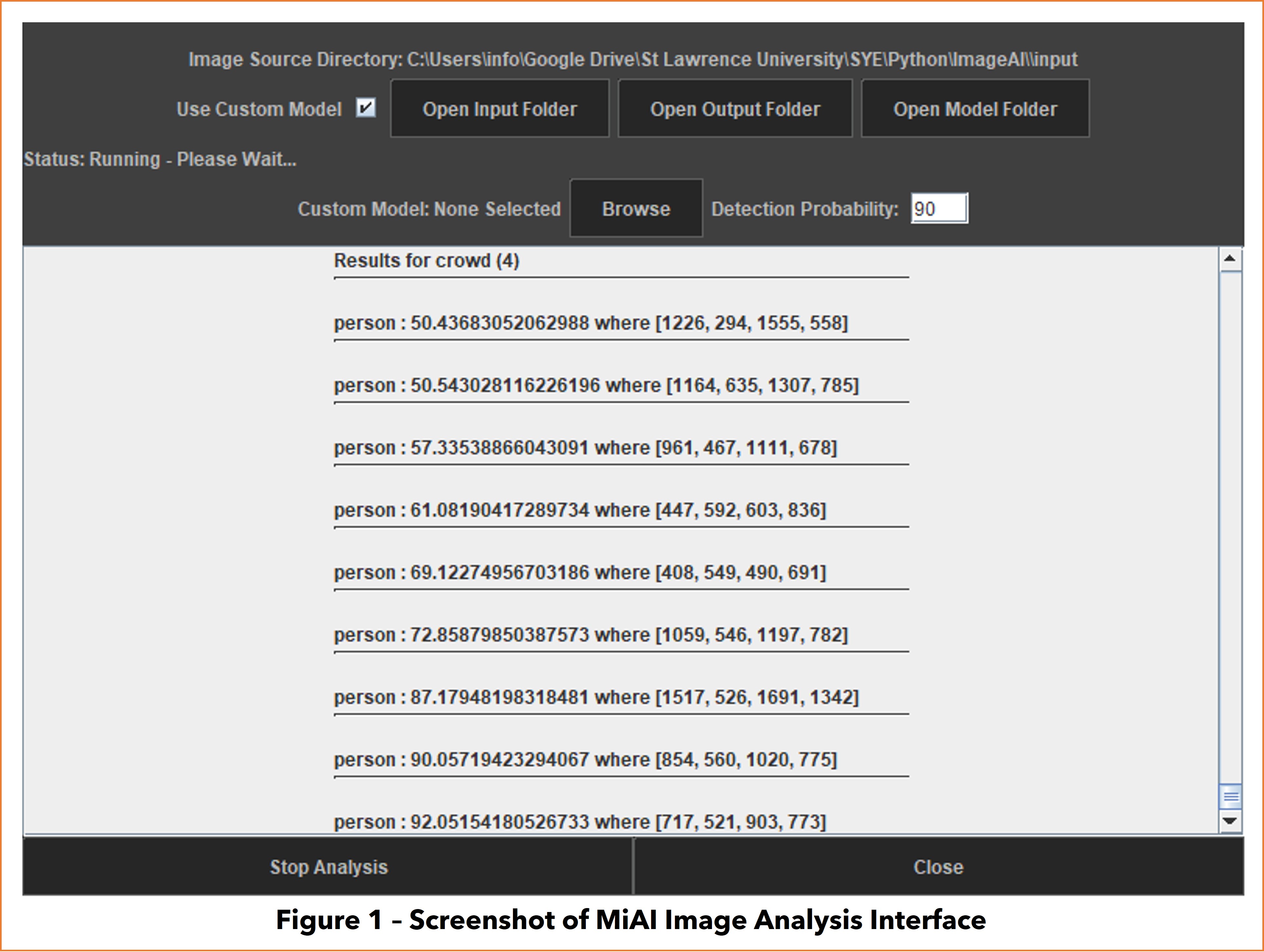

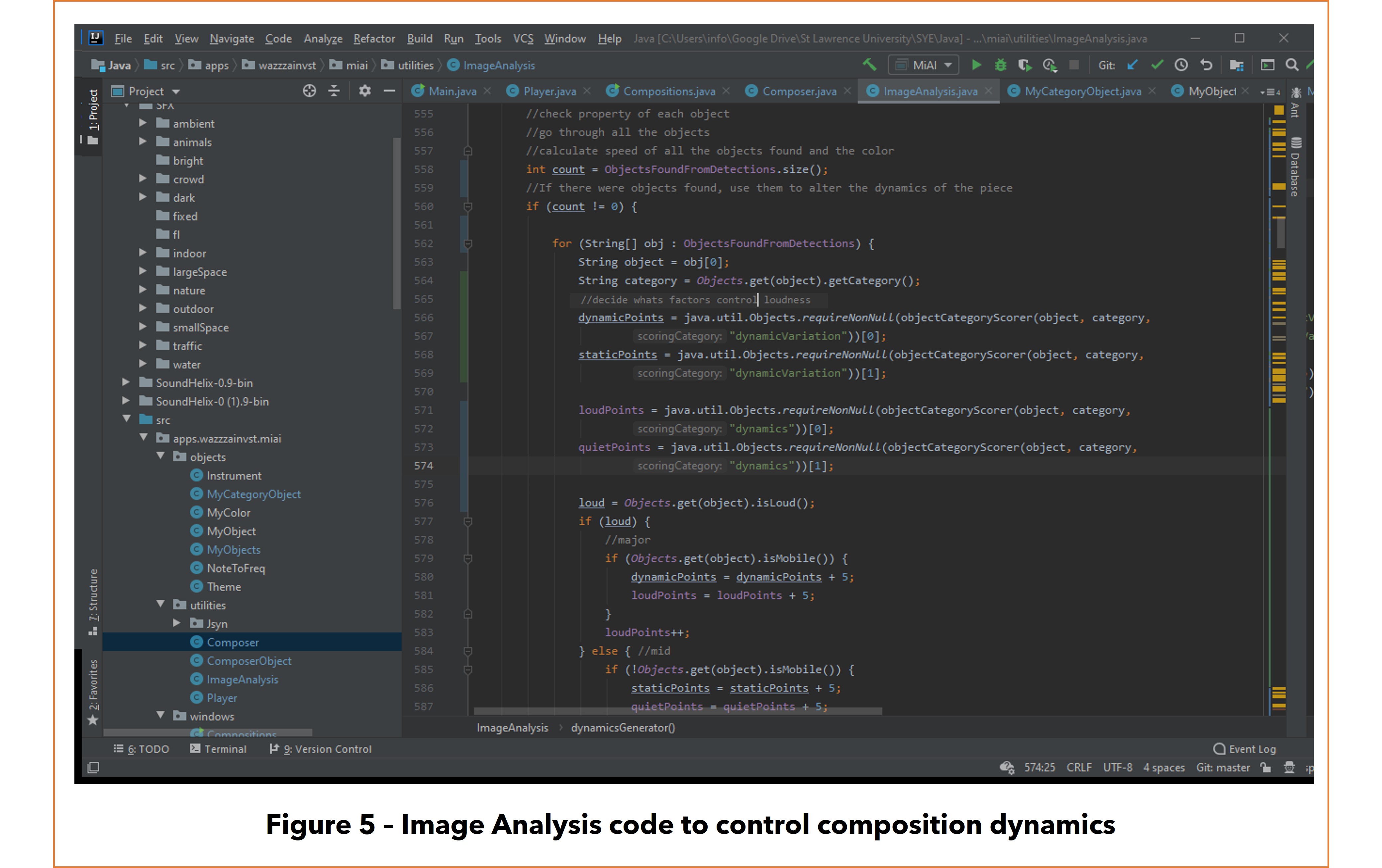

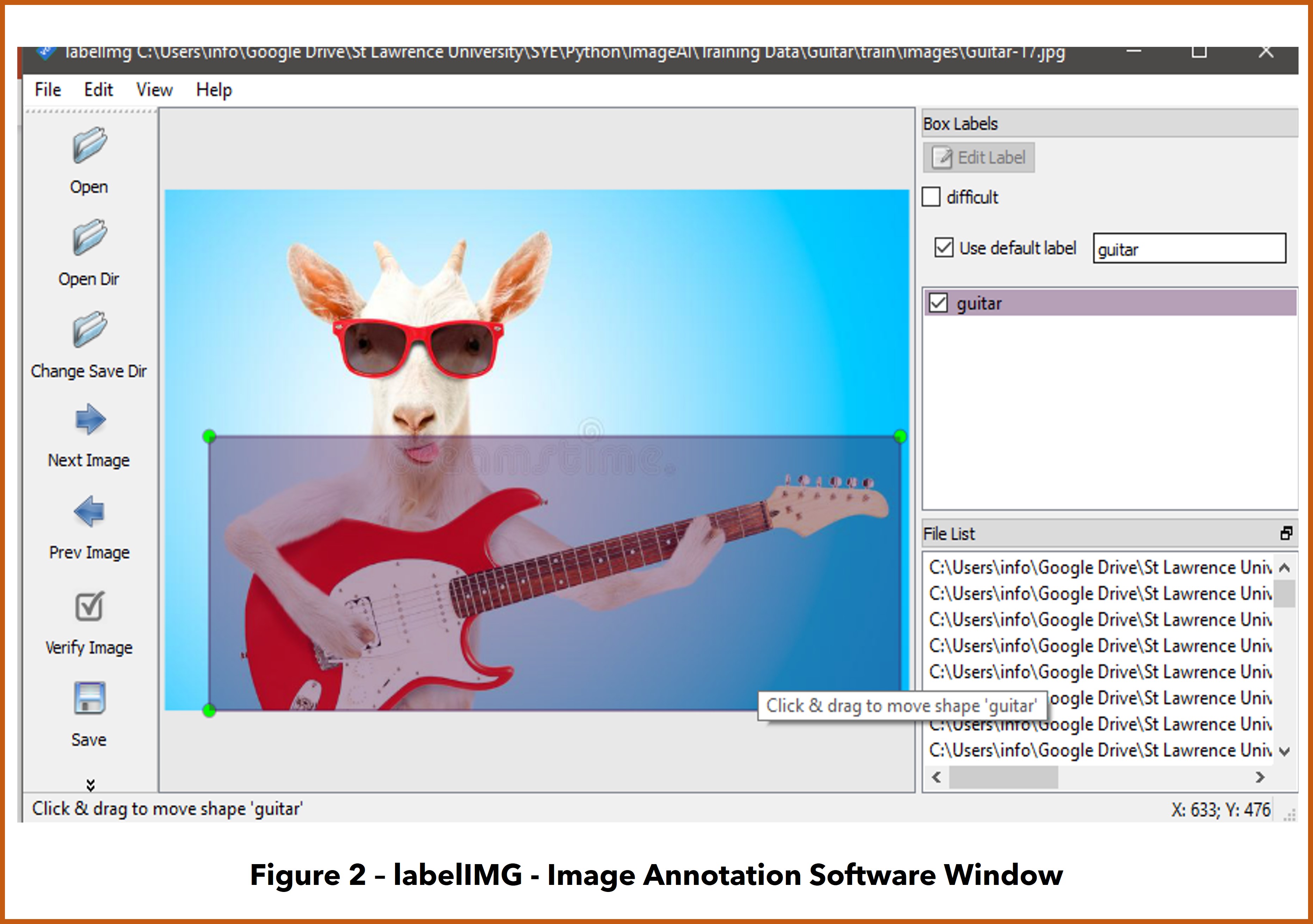

The application uses Python artificial intelligence libraries and Java music libraries to compose music based on the objects, colors, and activities detected in each frame.
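To make the color-to-music idea concrete, here is a minimal sketch of one way a frame's color could drive note selection. It is an illustration under stated assumptions, not the project's actual method: the C major scale, the hue-to-scale-degree mapping, and the function names `average_color`, `color_to_midi_note`, and `frames_to_melody` are all hypothetical, and frames are represented as plain lists of RGB tuples rather than decoded images.

```python
import colorsys

# Hypothetical choice: one octave of C major as MIDI note numbers (C4..B4).
C_MAJOR = [60, 62, 64, 65, 67, 69, 71]


def average_color(pixels):
    """Return the mean (r, g, b) of an iterable of 0-255 RGB tuples."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("frame has no pixels")
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def color_to_midi_note(rgb, scale=C_MAJOR):
    """Map a color's hue to a scale degree (assumed mapping, for illustration)."""
    r, g, b = (c / 255.0 for c in rgb)
    hue, _, _ = colorsys.rgb_to_hsv(r, g, b)  # hue lies in [0.0, 1.0]
    # Split the hue circle into equal slices, one per scale degree.
    index = min(int(hue * len(scale)), len(scale) - 1)
    return scale[index]


def frames_to_melody(frames):
    """Turn a sequence of frames (lists of RGB tuples) into MIDI note numbers."""
    return [color_to_midi_note(average_color(frame)) for frame in frames]


if __name__ == "__main__":
    red_frame = [(255, 0, 0)] * 4
    blue_frame = [(0, 0, 255)] * 4
    print(frames_to_melody([red_frame, blue_frame]))
```

In a fuller pipeline, the resulting note numbers would be handed to a synthesizer for playback, and detected objects or activities could choose the instrument or rhythm; those choices are left open here.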

This is an ongoing project that I hope to develop further with more sophisticated artificial intelligence systems.

Libraries: ImageAI (Python), TensorFlow (Python), OpenCV (Python), Keras (Python), JSyn audio synthesis (Java)

Choong-Soo Lee (Advisor)